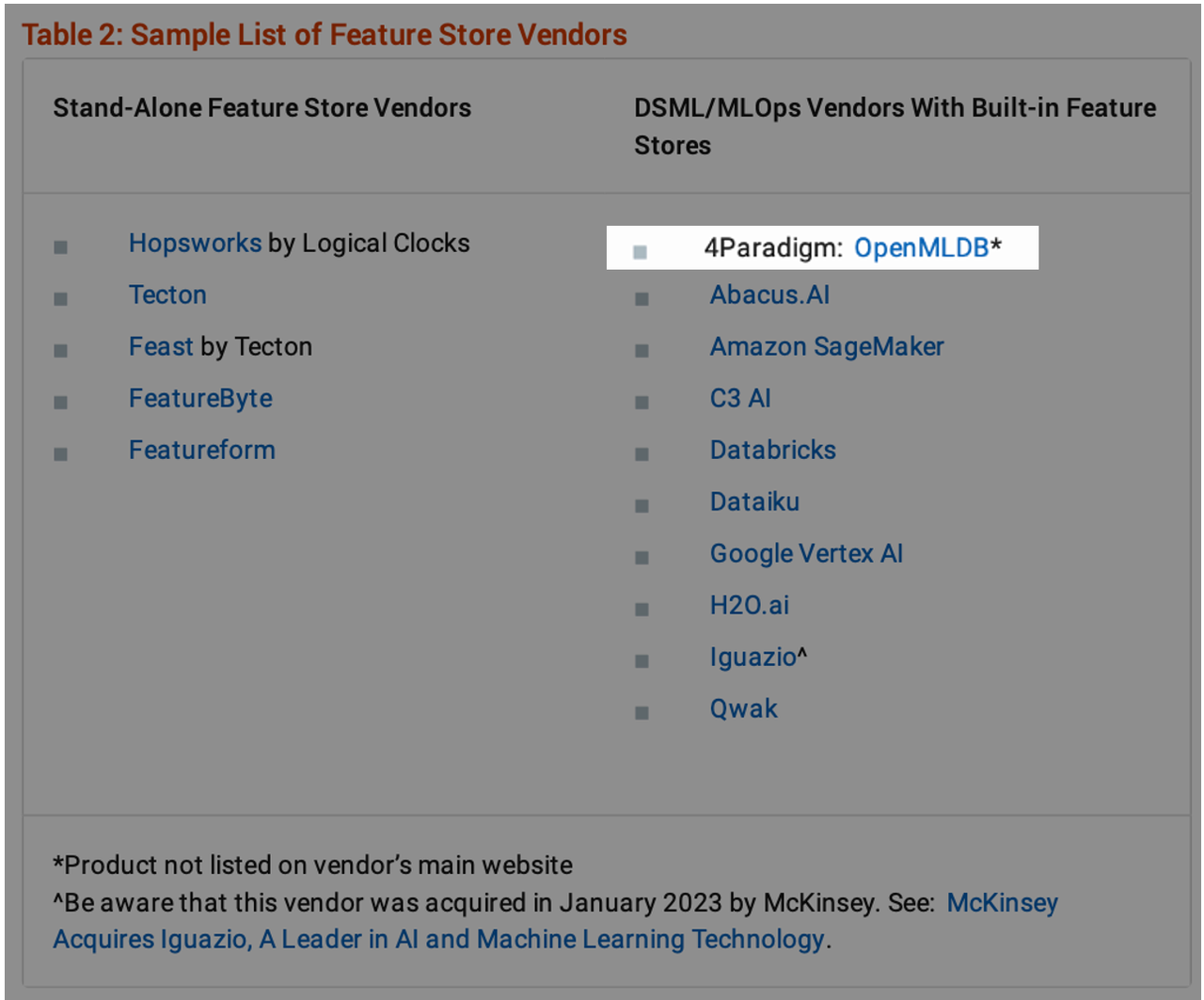

In "The Logical Feature Store: Data Management for Machine Learning," a report published by the international research and advisory firm Gartner, OpenMLDB is honored to have been selected as the only representative feature store vendor from China.

The report analyzes three major challenges that machine learning applications currently face in practice: low end-to-end efficiency, lack of reusability, and inconsistency between training and production environments. These challenges explain why a feature store has become an urgent necessity. Because developing a feature store in-house is highly complex and resource-intensive, Gartner holds that procuring one externally, especially from MLOps vendors whose platforms include a built-in feature store, is more cost-effective than building it. OpenMLDB was included in Gartner's list of recommended vendors, the only Chinese ML vendor on that list with a built-in feature store. The report offers valuable professional guidance for enterprises looking to scale up their use of AI in business.

OpenMLDB: A Production-Grade Feature Store with Consistent Online and Offline Computation, Achieving a 500% Efficiency Improvement per Unit Cost

In its report, Gartner highlights the challenges of applying machine learning in practice. Machine learning teams in enterprises typically spend much of their time on data issues, leaving little room for actual model development and optimization. Along the way, inconsistent feature definitions and repeated rework are common. OpenMLDB's own research points to the same problem: "in AI engineering practice, enterprises often spend as much as 95% of their overall time and effort on tasks such as data processing and feature validation."

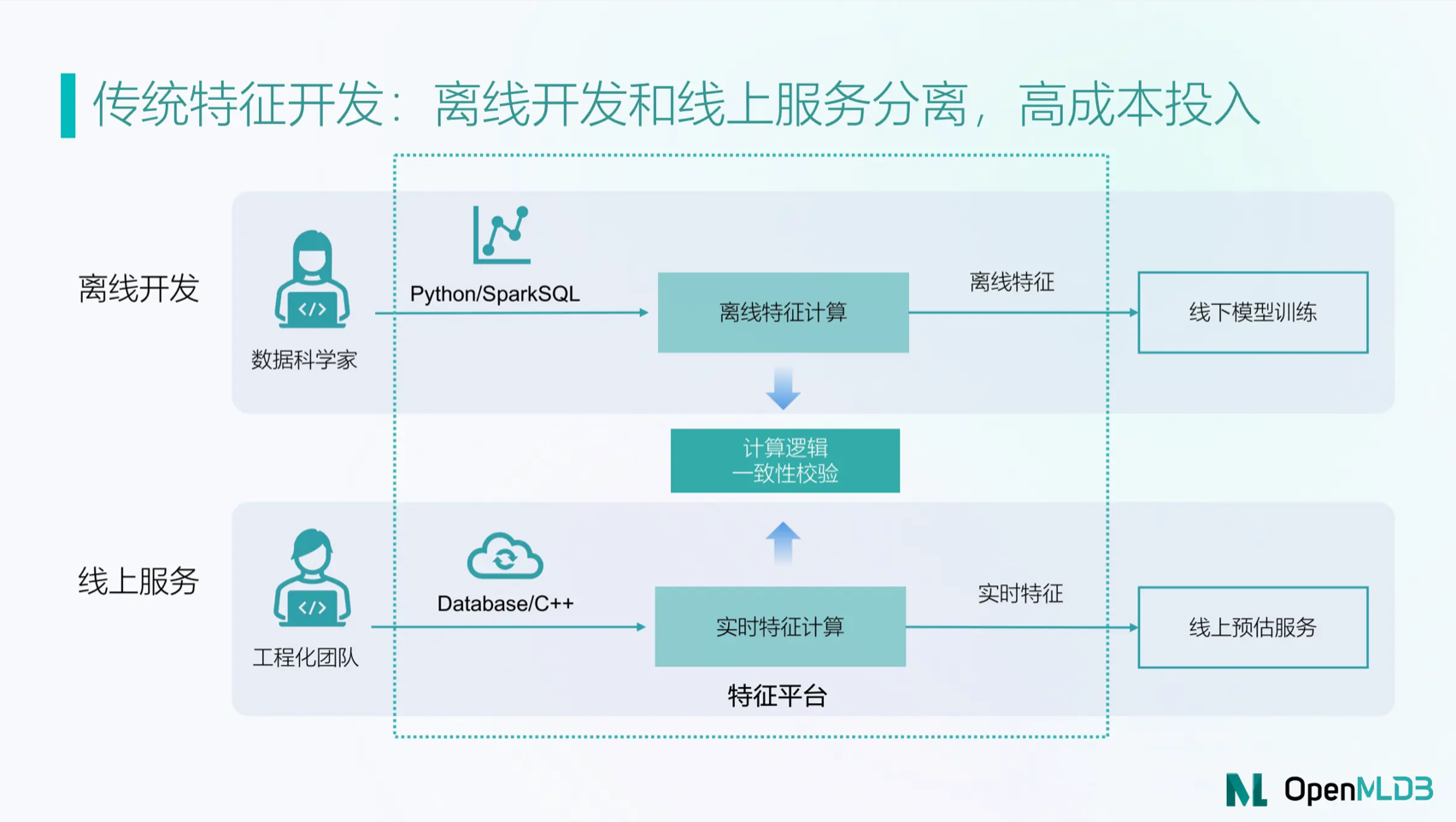

Without OpenMLDB, deploying real-time feature computation traditionally involves three steps: (1) data scientists develop feature scripts offline using SparkSQL or Python; (2) because these offline scripts cannot meet production requirements, the engineering team must re-implement and optimize them using a different tool stack; (3) finally, the offline feature scripts written by the data scientists and the online services built by the engineering team must be validated for consistency. The whole process involves two groups of developers and two tool stacks, which makes deployment expensive.

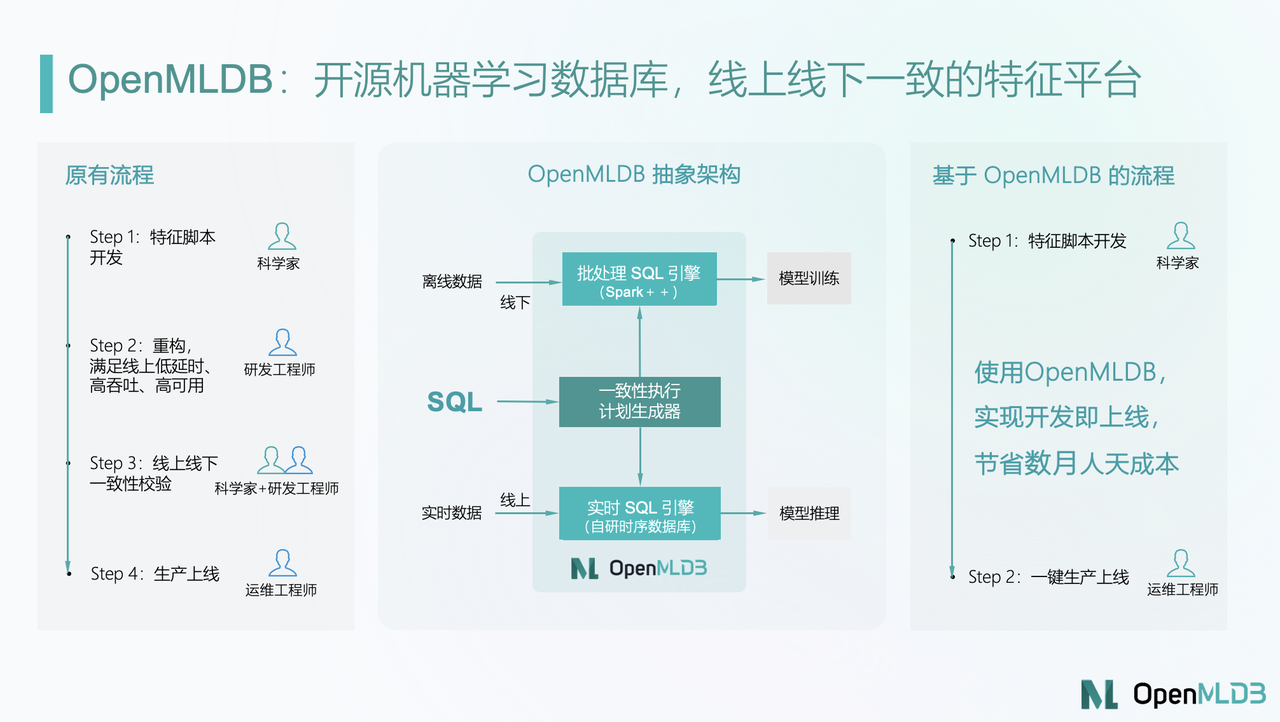

OpenMLDB aims to make the transition from development to deployment seamless, so that feature scripts written by data scientists can be deployed directly to production. The platform provides both an offline and an online processing engine. The online engine is deeply optimized to meet production-level requirements, and an automatic execution plan generator keeps online and offline computation consistent. With OpenMLDB, the feature stage of a machine learning application takes only two steps: (1) data scientists develop offline feature scripts in SQL, and (2) they deploy the script to the online engine with a single deployment command. The resulting online service delivers millisecond-level latency, high concurrency, and high availability while remaining consistent with the offline results.
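The idea of "one feature definition, two engines" can be illustrated with a toy model. The sketch below is not OpenMLDB's API or its execution plan generator (in OpenMLDB the definition is a SQL query deployed with its `DEPLOY` statement); it is a hypothetical Python illustration that assumes a rows-based window sum as the feature. A single declarative `WindowSpec` is interpreted by two different paths, a batch pass that produces offline training data and a stateful per-request path for online serving, and because both derive from the same definition they return identical values.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class WindowSpec:
    # Hypothetical declarative feature: sum of `amount` over the current row and
    # up to `preceding` earlier rows of the same user, ordered by timestamp.
    preceding: int


@dataclass(frozen=True)
class Order:
    user_id: str
    ts: int  # event time in milliseconds
    amount: float


def offline_batch(spec: WindowSpec, orders: List[Order]) -> List[float]:
    """Offline path: compute the feature for every row of a historical table."""
    results = [0.0] * len(orders)
    by_user: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for idx, order in enumerate(orders):
        by_user[order.user_id].append((order.ts, idx))
    for rows in by_user.values():
        rows.sort()  # order each user's rows by timestamp
        for pos, (_, idx) in enumerate(rows):
            start = max(0, pos - spec.preceding)
            results[idx] = sum(orders[j].amount for _, j in rows[start:pos + 1])
    return results


class OnlineEngine:
    """Online path: keep per-user history and answer one request at a time."""

    def __init__(self, spec: WindowSpec) -> None:
        self.spec = spec
        self.history: Dict[str, List[Order]] = defaultdict(list)

    def request(self, order: Order) -> float:
        past = self.history[order.user_id]
        # Only the last `preceding` stored rows of this user are needed.
        window = past[max(0, len(past) - self.spec.preceding):]
        value = sum(o.amount for o in window) + order.amount
        past.append(order)  # assumes requests arrive in timestamp order per user
        return value


spec = WindowSpec(preceding=2)
orders = [
    Order("u1", 1000, 10.0),
    Order("u2", 1500, 7.0),
    Order("u1", 2000, 20.0),
    Order("u1", 3000, 5.0),
    Order("u1", 4000, 1.0),
]
offline = offline_batch(spec, orders)
engine = OnlineEngine(spec)
online = [engine.request(o) for o in orders]  # replay the same orders in time order
print(offline)            # [10.0, 7.0, 30.0, 35.0, 26.0]
print(offline == online)  # True
```

The two paths deliberately use different algorithms (a full batch scan versus incremental per-request state), which mirrors why a shared definition matters: when engineers hand-write the online path separately, nothing guarantees that it matches the offline one.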

The greatest value of OpenMLDB therefore lies in significantly reducing the engineering cost of deploying artificial intelligence. In one large business scenario, OpenMLDB cut the required effort from 6 person-months with the traditional approach to just 1 person-month. Because the engineering team no longer needs to develop online services or run online-offline consistency checks, efficiency per unit cost improves by 500%.
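The 500% figure follows from simple arithmetic: if the same deployment work drops from 6 person-months to 1, output per unit of cost rises sixfold, which is an increase of (6 / 1 - 1) × 100% = 500%. A minimal check in Python, where the helper name is hypothetical:

```python
def efficiency_gain_percent(cost_before: float, cost_after: float) -> float:
    """Percent increase in output per unit cost when the same work drops
    from `cost_before` to `cost_after` (for example, person-months)."""
    if cost_after <= 0:
        raise ValueError("cost_after must be positive")
    return (cost_before / cost_after - 1) * 100


print(efficiency_gain_percent(6, 1))  # 500.0
```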

OpenMLDB x Akulaku: A Scenario-Driven Approach Delivers 4-Millisecond Windowed Feature Computation over One Billion Orders, with Resource Savings of Over 4 Million

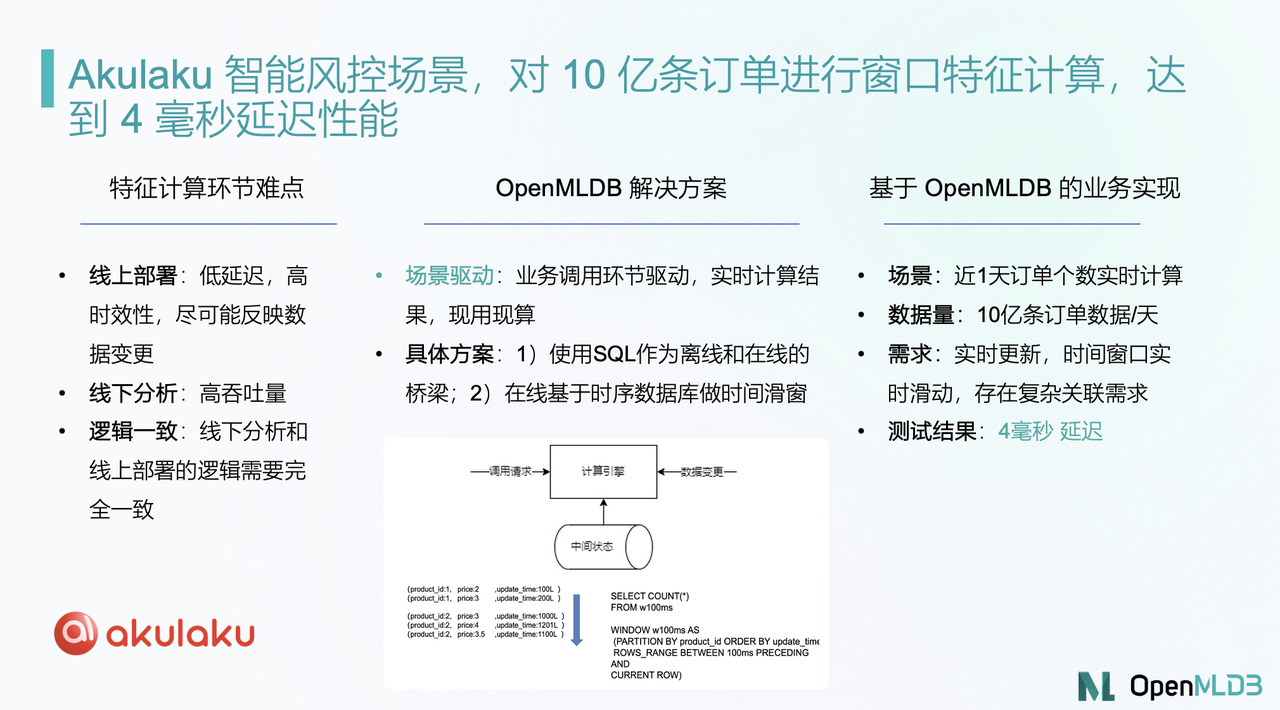

OpenMLDB is committed to addressing the data governance challenges of AI engineering and has already been deployed in more than a hundred enterprise-level AI scenarios. One of its users is Akulaku, a leading internet finance service provider in Southeast Asia whose business covers the entire e-commerce chain, with AI applications spanning financial risk control, intelligent customer service, and e-commerce recommendations. In e-commerce finance, the demands on a feature store are high: online deployment must be low-latency and efficient, with real-time feature computation reflecting new data as quickly as possible; offline analysis requires high throughput; and online and offline results must be consistent. Meeting all three requirements at once is difficult.

To address this challenge, OpenMLDB helped Akulaku build an intelligent computing architecture that embeds OpenMLDB's online engine in the model computation layer and its offline engine in the feature computation layer. Following a scenario-driven approach, real-time computation results are invoked directly within the business call flow. With this architecture, windowed feature computation over one billion orders achieves a latency of 4 milliseconds, with resource savings conservatively estimated at over 4 million.
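Windowed features such as "total order amount per user over the last minute" are what make real-time risk features costly at scale, since a naive implementation rescans each user's history on every request. One common way to serve them with low latency is to keep, per key, a queue of recent events plus a running aggregate, and to evict expired events incrementally. The sketch below is a hypothetical Python illustration of that general technique, assuming timestamps arrive in non-decreasing order per key; it is not OpenMLDB's storage engine or Akulaku's implementation.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class SlidingWindowSum:
    """Per-key sum and count over a time window of `window_ms` milliseconds.
    Assumes events for each key arrive in non-decreasing timestamp order."""

    def __init__(self, window_ms: int) -> None:
        self.window_ms = window_ms
        self.events: Dict[str, Deque[Tuple[int, float]]] = {}
        self.totals: Dict[str, float] = {}

    def add(self, key: str, ts: int, amount: float) -> Tuple[float, int]:
        """Insert one order and return (sum, count) over the window (ts - window_ms, ts]."""
        q = self.events.setdefault(key, deque())
        q.append((ts, amount))
        total = self.totals.get(key, 0.0) + amount
        # Evict events that fell out of the window. Each event is evicted at
        # most once, so the amortized cost per update is O(1).
        while q and q[0][0] <= ts - self.window_ms:
            _, old_amount = q.popleft()
            total -= old_amount
        self.totals[key] = total
        return total, len(q)


window = SlidingWindowSum(window_ms=60_000)  # hypothetical 1-minute window
print(window.add("u1", 0, 100.0))      # (100.0, 1)
print(window.add("u1", 30_000, 50.0))  # (150.0, 2)
print(window.add("u1", 61_000, 20.0))  # (70.0, 2): the order at t=0 has expired
print(window.add("u2", 61_000, 5.0))   # (5.0, 1)
```

Keeping the running total alongside the queue means a request only touches the events it inserts or evicts, so latency stays flat no matter how long a user's full order history grows.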

OpenMLDB has also helped many other enterprises optimize their data architecture so that they can implement AI scenarios more effectively. For example, it helped Vipshop reduce the feature development and iteration time for personalized product recommendations from 5 person-days to 2 person-days, a 60% reduction in feature iteration time. A leading bank's anti-fraud system uses OpenMLDB for feature computation and management across offline development, online inference, and self-learning. This resolved long-standing problems with data leakage (features computed from future data) and inconsistent results, and eliminated costly consistency verification. After adopting OpenMLDB for real-time personalized product recommendations, Huawei achieved data updates within minutes and feature deployment within hours. Looking ahead, OpenMLDB aims to help more enterprises overcome real-world data and feature processing challenges and put AI to work in their business.

As the only Chinese feature store vendor selected as a representative in Gartner's report "The Logical Feature Store: Data Management for Machine Learning," OpenMLDB will continue to refine its product, optimize its performance, and build on its strengths as a database-centric feature platform. Its goal is to free AI practitioners from tedious, inefficient data processing and to help enterprises implement machine learning applications more simply and efficiently.

For more information on OpenMLDB:

- Official website: https://openmldb.ai/

- GitHub: https://github.com/4paradigm/OpenMLDB

- Documentation: https://openmldb.ai/docs/en/

- Join us on Slack!